February 16, 2026

By Nehal Malik

Tesla vehicles across Europe are getting a significant intelligence boost this week as xAI’s Grok chatbot officially begins its international rollout. After making its debut in North America last year, the advanced assistant is now landing in European vehicles via software update 2026.2.6.

This is a major milestone for Tesla’s in-car experience because it represents the first time Grok has been expanded to non-English-speaking markets with localized support. While North American owners have been using the chatbot for general queries since software version 2025.26, and more recently for navigation commands, European owners are receiving the general assistant and navigation support in one go.

Grok Becomes Your Personal Guide

The standout feature of this release is Grok with Navigation Commands. Unlike the standard voice system that requires rigid phrases, Grok understands natural language. You can simply talk to Grok, and it will add, edit, and organize navigation destinations for you, becoming your personal guide.

You can also ask complex, multi-step questions, such as finding a highly rated Thai restaurant near your current location or planning a sightseeing tour. It can even handle nuanced requests like finding a Supercharger that is within walking distance of a coffee shop. This level of conversational intelligence effectively turns the vehicle into a proactive assistant rather than just a passive screen.

Availability

Tesla has confirmed that the rollout has initially started in nine countries: the U.K., Ireland, Germany, Switzerland, Austria, Italy, France, Portugal, and Spain. Owners in these regions will need an active Premium Connectivity subscription and a vehicle equipped with an AMD Ryzen processor to access the feature.

To get started, you can find the assistant in the App Launcher or simply long-press the voice button on the steering wheel. Grok has different voices and personalities that you can choose from, including Assistant, Storyteller, Unhinged, Therapist, Argumentative, and more. You’ll need to set Grok’s personality to Assistant to enable the navigation commands.

What is Next for Europe?

While this initial list covers most of Western Europe, Tesla has already promised that more is to come. Based on how the company typically handles European feature releases, we can likely expect an expansion into the Nordic countries and Central Europe in the coming weeks.

This update brings European owners one step closer to the features released in North America, with FSD, hopefully, being the next big feature to arrive in Europe.

There’s still no word on when Grok will become available for vehicles with Intel infotainment units, but we hope it’s still in the plans.

February 16, 2026

By Karan Singh

After the bombshell report last year that Tesla was finally caving to consumer demand and bringing Apple CarPlay to its vehicles, the trail went surprisingly cold.

Now, thanks to new details from Bloomberg’s Mark Gurman, we know why this feature has been delayed: a technical conflict between Apple Maps and FSD, compounded by sluggish adoption of the latest iOS update.

Integration with Tesla’s Map

The core issue stems from how FSD and Tesla’s built-in nav currently interact. FSD relies on the car’s native navigation system for route planning, though it may sometimes deviate from the planned route on its own. However, the vast majority of driving decisions are made based on the planned route.

During internal testing, Tesla’s engineers discovered a major conflict. If a driver has CarPlay active, Apple Maps will run simultaneously and often provide conflicting turn-by-turn guidance. Imagine the car autonomously preparing for a right turn while Apple Maps tells you to go straight.

To prevent this confusing and potentially dangerous dual-navigation scenario, Tesla requested that Apple engineer a custom fix. Surprisingly, Apple obliged and implemented the adjustments needed for the vehicle and CarPlay to communicate and keep their routes in sync.

This news makes it sound like Tesla is planning to support FSD, even if you’re using CarPlay. The user would be able to add their destination in CarPlay and activate FSD to start driving toward their destination. Behind the scenes, CarPlay would send the destination to the Tesla OS, which would then communicate back the exact route to take.
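The hand-off described above can be pictured with a minimal sketch. Everything in it is hypothetical: `TeslaNav`, `CarPlaySession`, and `plan_route` are illustrative names, not real Tesla or Apple APIs, and the actual protocol has not been published.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Route:
    destination: str
    steps: List[str]


class TeslaNav:
    """Hypothetical stand-in for the car's native navigation (the route owner)."""

    def plan_route(self, destination: str) -> Route:
        # A real planner would compute turn-by-turn steps; this is a placeholder.
        return Route(destination, [f"Head toward {destination}", "Arrive"])


@dataclass
class CarPlaySession:
    """Hypothetical CarPlay side: forwards the destination, mirrors the reply."""

    nav: TeslaNav
    displayed: Optional[Route] = None

    def set_destination(self, destination: str) -> Route:
        # 1. CarPlay sends the destination to the vehicle.
        route = self.nav.plan_route(destination)
        # 2. The vehicle replies with the exact route, and CarPlay displays
        #    that route instead of computing its own.
        self.displayed = route
        return route
```

The design point is that only one side plans the route. Because CarPlay shows the route object it gets back, the guidance on screen and the path FSD follows cannot drift apart.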

iOS 26 Bottleneck

If Apple fixed the bug, why are we still waiting? According to the report, the answer lies in the iPhone update cycle.

Apple bundled this specific Tesla compatibility fix into an incremental update for iOS 26 (specifically, a patch following the initial 26.0 release). Apple released iOS 26 in September 2025, followed by iOS 26.0.1 and 26.1, which were both released before Tesla’s holiday update. However, iOS 26.2 wasn’t released until December 12th, and more recently, iOS 26.3 was released on February 11th. While it’s not clear which version includes the fix Tesla needed, it likely came in iOS 26.2 or later.

Another point of contention is that iOS 26 adoption has been notably slower than in previous generations.

According to Apple’s own recent App Store data, only about 74% of iPhones released in the last four years are currently running iOS 26 – and an even smaller fraction are running the specific incremental patch required for the Tesla fix. The total adoption rate across all active iPhones is even lower at just 66%.

Rather than releasing a highly anticipated feature that would immediately break or be buggy for a large share of owners due to the fix not being available in their iOS version, Tesla is holding this feature back until iOS 26 adoption is higher.

More Than a Windowed Mode?

When the update does finally roll out – potentially in the upcoming Spring Update – drivers should temper their expectations about what CarPlay will bring.

Tesla isn’t handing over the keys to the kingdom to Apple the way other automakers do with CarPlay Ultra. Instead, CarPlay would run as its own app, meaning users would first need to open CarPlay and then select the app they want within it. This could put two docks on the Tesla infotainment screen while CarPlay is open, but Tesla does seem to be addressing some of these issues.

For example, if they’re making sure the route is consistent between Tesla Maps and Apple Maps in CarPlay, they may also be carrying over other details, like the song currently being played. So if users opened up Spotify on CarPlay, they’d be greeted by the current song instead of an interface that doesn’t match what they’re listening to. These features would certainly help avoid the confusion of having duplicate apps for maps, Spotify, Apple Music, and so on.

One option Tesla could consider is showing only one dock at a time. If CarPlay isn’t open, you see the traditional Tesla dock with its list of apps. However, if you open CarPlay, then CarPlay hides Tesla’s dock, or potentially hides some Tesla apps that would be redundant, like Spotify.

Either way, expect CarPlay to largely run inside its own dedicated window. This compromise allows the driver to access CarPlay features and apps, while Tesla maintains control over vehicle controls, FSD visualizations, and the important information it must display on the UI.

Ultimately, Tesla’s CarPlay integration is still very much alive – you just might need to remind your friends to update their iPhones before it arrives.

February 15, 2026

By Karan Singh

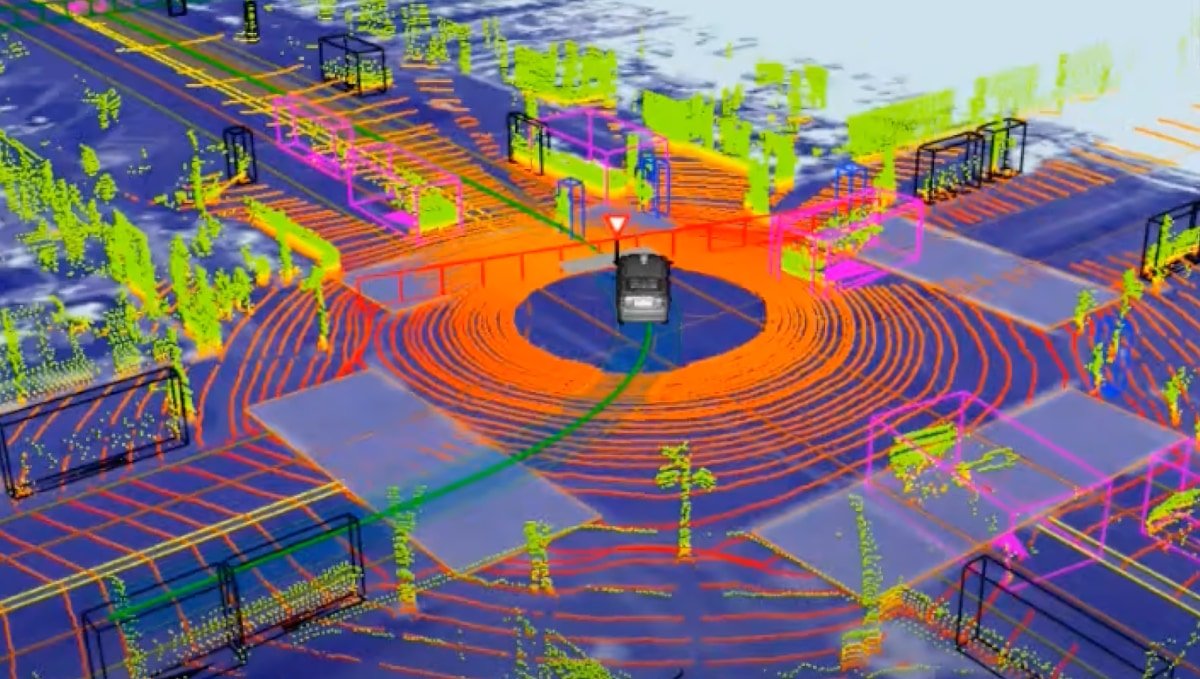

In the high-stakes race to solve autonomous driving, there’s a deep philosophical and engineering divide that has emerged over the years.

On one side stands virtually the entire automotive and tech industry, championing a concept called sensor fusion — a belt-and-suspenders approach that combines cameras, radar, and LiDAR to build a redundant, multi-layered view of the world.

On the other side stands Tesla, alone, making the bold and controversial bet on a single modality — pure, camera-based vision.

Tesla’s decision to actively remove and disable hardware like radar from its vehicles was met with widespread skepticism, but it was a move born from a deeply held, first-principles belief about the nature of intelligence, both artificial and natural. To understand just why Tesla took this bet, one must first understand what exactly Tesla rejected.

What is Sensor Fusion?

The concept of sensor fusion is fairly simple. It aims to leverage the unique strengths of different sensor types to create a single, unified, and highly robust model of the environment around a vehicle. Each sensor type has its own advantages and disadvantages, and, in theory, fusing them mitigates each type’s individual weaknesses.

Cameras provide the richest, highest-resolution data, seeing the world in color and texture much as a human does. They can read text on signs, identify the color of a traffic light, and understand complex visual context. Their primary weakness is that they can be degraded by adverse weather and low-light conditions, and they struggle to measure relative velocity.

Radar is excellent at measuring the distance and velocity of objects, even in terrible weather. It can “see” through rain, fog, and snow with ease, but its weakness lies in its lower resolution. It would take a radar array roughly 12 feet by 12 feet, costing millions, to match the resolution of a single camera pointed in a single direction. Radar is good at telling you that something is there and how fast it’s moving – as long as it is moving – but it struggles to identify what something is, and it struggles with objects at a standstill.
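To get a feel for why the array would need to be so large, here is a rough back-of-the-envelope sketch. It uses the standard diffraction rule of thumb (angular resolution ≈ wavelength / aperture). The 77 GHz carrier is a common automotive radar band, while the camera field of view and pixel count are assumed values chosen purely for illustration, not Tesla specifications.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def aperture_for_resolution(freq_hz: float, angular_res_rad: float) -> float:
    """Approximate antenna aperture (m) needed for a given angular resolution.

    Uses the diffraction rule of thumb: resolution ~ wavelength / aperture.
    """
    wavelength = C / freq_hz
    return wavelength / angular_res_rad


# Assumed values for illustration only.
RADAR_FREQ_HZ = 77e9     # common automotive radar band
CAMERA_FOV_DEG = 50.0    # hypothetical horizontal field of view
CAMERA_PIXELS = 1280     # hypothetical horizontal pixel count

# Angle covered by a single camera pixel, in radians.
per_pixel_rad = math.radians(CAMERA_FOV_DEG) / CAMERA_PIXELS
aperture_m = aperture_for_resolution(RADAR_FREQ_HZ, per_pixel_rad)
print(f"Aperture needed: {aperture_m:.1f} m ({aperture_m / 0.3048:.0f} ft)")
```

Under these assumptions the answer comes out at several meters per side, the same order of magnitude as the figure quoted above. The exact number shifts with the camera parameters you plug in.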

LiDAR operates like radar but uses lasers, creating a precise 3D point cloud map of the environment. It is highly accurate in measuring distance and shape, enabling it to construct a highly detailed 3D model of the environment. Its primary weaknesses are its relatively high sensor cost and its performance degradation in adverse weather conditions, particularly fog, snow, and rain. LiDAR also has another weakness: it pulls in so much data that merely sorting through it, the first processing step, requires immense computational effort.

The established industry approach, used by companies like Waymo and Cruise, is to fuse data from all three, creating a system with built-in redundancy.

Where Tesla Started: A Multi-Sensor Approach

It’s a forgotten piece of history for many, but Tesla did not start with a vision-only approach. Early Autopilot systems, from their launch through 2021, were equipped with both cameras and a forward-facing radar unit, supplied by companies specializing in automotive sensors, like Bosch. This was a conventional sensor-fusion setup, in which the radar served as the primary sensor for measuring the distance and speed of the vehicle ahead, enabling features such as Traffic-Aware Cruise Control and early iterations of FSD Beta.

This multi-sensor approach was the standard for years. Even as Tesla developed its own custom FSD hardware, the assumption was that radar would remain a key component, a safety net for the burgeoning vision system. Then, in 2021, Tesla made a radical pivot.

The Pivot: Why Tesla Abandoned Radar

The shift began in the summer of 2021, when Tesla announced it would remove the radar from new Model 3 and Model Y vehicles and transition to a camera-only system called Tesla Vision. The move was driven by a core, first-principles argument from Elon Musk about the dangers of conflicting sensor data – an argument he continues to make today.

Lidar and radar reduce safety due to sensor contention. If lidars/radars disagree with cameras, which one wins?

This sensor ambiguity causes increased, not decreased, risk. That’s why Waymos can’t drive on highways.

We turned off the radars in Teslas to increase safety.…

— Elon Musk (@elonmusk) August 25, 2025

Elon’s argument is that sensor fusion creates a new, more dangerous problem: Sensor Contention.

When two different sensor systems provide conflicting information, which one does the car trust? Which sensor is considered the “more precise” or “safer” sensor? Is it up to the car in the moment? Is it something decided by the engineers in advance? Sensor ambiguity poses a risk because arbitrating between conflicting inputs can paralyze decision-making, especially when safety is prioritized.
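The contention problem can be made concrete with a small sketch. This is not Tesla’s or any vendor’s actual logic. It shows one textbook way to fuse two distance estimates (inverse-variance weighting) and to flag when they disagree by more than their stated uncertainties allow. The `Reading` type, the readings, and the 3-sigma gate are all assumed for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    source: str
    distance_m: float  # estimated distance to the nearest obstacle
    sigma_m: float     # assumed 1-sigma uncertainty of that estimate


def fuse(a: Reading, b: Reading, gate_sigmas: float = 3.0):
    """Return (fused_distance_m, in_contention).

    Fuses two readings by inverse-variance weighting and flags contention
    when they differ by more than `gate_sigmas` combined standard deviations.
    """
    wa, wb = 1 / a.sigma_m**2, 1 / b.sigma_m**2
    fused = (wa * a.distance_m + wb * b.distance_m) / (wa + wb)
    combined_sigma = (a.sigma_m**2 + b.sigma_m**2) ** 0.5
    in_contention = abs(a.distance_m - b.distance_m) > gate_sigmas * combined_sigma
    return fused, in_contention


# Hypothetical readings: the camera sees a clear road, the radar sees a return.
camera = Reading("camera", distance_m=80.0, sigma_m=4.0)
radar = Reading("radar", distance_m=30.0, sigma_m=1.0)
fused, contention = fuse(camera, radar)
print(f"fused={fused:.1f} m, contention={contention}")
```

Here the fused estimate lands close to the radar’s value because the radar claims the smaller uncertainty, even though the two sensors plainly disagree. If the radar return is spurious, the car brakes for nothing. If the system discards the radar instead, it loses the redundancy the sensor was there to provide. Either policy has to be chosen in advance, which is the dilemma described above.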

This isn’t just a philosophical argument, either, and Tesla’s FSD engineers have provided concrete examples. In the same thread, Tesla AI Engineer Yun-Ta Tsai noted that radar has fundamental weaknesses – it cannot properly differentiate stationary objects that cannot produce frequency shifts, objects with thin cross-sections, or objects with low radar reflectivity. This is the source of the infamous phantom braking events that plagued Tesla in the past, where a car might see a stationary overpass or discarded aluminum can on the roadside and mistake it for a stopped vehicle, causing it to brake unnecessarily.
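One way to read the stationary-object point: seen from a moving car, every stationary object closes at the car’s own speed, so Doppler alone cannot tell a stopped vehicle from an overpass or roadside debris. The sketch below uses the standard Doppler relation (shift = 2 × closing speed × carrier frequency / c) in a simplified head-on geometry. The 77 GHz carrier is a common automotive band, and the speeds are assumed values.

```python
C = 299_792_458.0  # speed of light, m/s


def doppler_shift_hz(closing_speed_mps: float, carrier_hz: float = 77e9) -> float:
    """Doppler shift for a target closing at `closing_speed_mps` (head-on)."""
    return 2 * closing_speed_mps * carrier_hz / C


EGO_SPEED = 30.0  # m/s, assumed speed of our own car

# Ground speed of each target along our direction of travel (assumed values).
targets = {
    "lead car at 25 m/s": 25.0,
    "stopped car in lane": 0.0,
    "overpass": 0.0,
    "can on the roadside": 0.0,
}

# Closing speed is our speed minus the target's ground speed.
shifts = {
    name: doppler_shift_hz(EGO_SPEED - ground_speed)
    for name, ground_speed in targets.items()
}

for name, shift in shifts.items():
    print(f"{name:>22}: {shift:8.0f} Hz")
```

The moving lead car stands out with its own distinct shift, while the stopped car, the overpass, and the can all produce the same one. A radar that filters out that shared “stationary world” signature to suppress clutter risks ignoring a real stopped vehicle. One that keeps it risks braking for an overpass.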

From Tesla’s perspective, the road to a generalized solution to vehicle autonomy is to solve vision. Humans drive with two biological cameras and a powerful neural network. The bet here is that if you can make computer vision work perfectly, any other sensor is, at best, a distraction, and at worst, a source of dangerous ambiguity.

Where We Are Today: The Vision on Vision

Today, every new Tesla relies solely on Tesla Vision, powered by its eight cameras. The system uses a sophisticated neural network to create a 3D vector-space representation of the world, which the car then analyzes and navigates within.

The story about vision has a curious footnote. When Tesla launched its Hardware 4 (now AI4), the new Model S and Model X vehicles were equipped with a new, high-definition radar. However, in a move that solidified its commitment to the vision-only path, Tesla has never activated these radars for use in FSD.

In fact, FSD is actually the most evolved on the Model Y, Tesla’s most ubiquitous vehicle, rather than the ones with the additional sensors. While Tesla likely does gather some data from those radars and validates system performance, they aren’t actually part of the FSD suite.

A Binary Outcome

Tesla’s decision to abandon sensor fusion is the biggest single differentiator between its approach to autonomy and that of the rest of the industry. It is a high-stakes, all-or-nothing gamble, which they’re definitely winning so far.

Tesla, Elon, Ashok, and the Tesla AI team all believe that the only path to a scalable, general-purpose autonomous system that can navigate the world with human-like intelligence is to solve the problem of vision completely. If they are right, they will have created a system that is far cheaper and infinitely more scalable than the expensive, sensor-laden vehicles from competitors.

If they are wrong, they may eventually hit a performance ceiling that can only be overcome by those very sensors – but so far, we’ve seen neither hide nor hair of such a ceiling.

Today, Tesla is all-in on its vision-only system, and no one can deny its progress or capability.